Data centers and their performance criteria are becoming more important as dependency on information technology increases. The main responsibility of these facilities, which co-locate servers, storage, and network devices, is to ensure that these devices operate with high business continuity, high performance, and high reliability throughout their expected service life (lifecycle). At the same time, the cost of power consumption in such a critical facility is one of its highest operating expenses.

The power consumption of each system in the data center facility is naturally among the topics broadly discussed by the data center facility management as well as by the suppliers providing products to the facility. The facility management is expected to manage power consumption in order to limit both utility cost and environmental footprint.

As a main principle, efficiency is a parameter that has to be managed, and a strategy should be established based on sufficient data collected at a regular frequency. Efficiency management should be performed in alignment with capacity management.

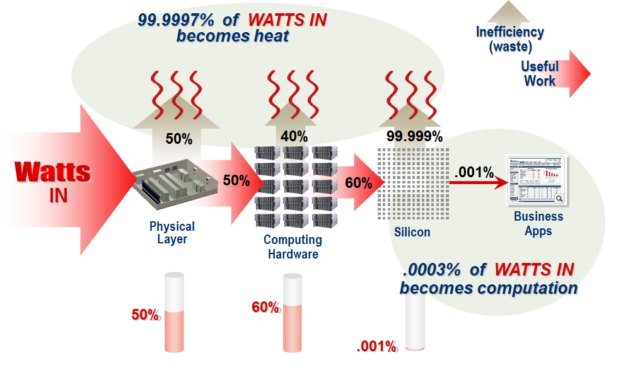

The figure above shows where the power supplied to a data center is lost. From left to right, it shows the data center facility, the IT hardware located in the facility, and the microprocessor block inside the IT hardware.

PUE (“Power Usage Effectiveness”) is a popular term in the data center literature. This criterion expresses facility performance in terms of the power consumed by the data center’s critical physical infrastructure.

A large part of the total facility losses results from inefficient electrical systems (e.g., UPS, power distribution) and, in particular, from the consumption of critical cooling systems. PUE is defined as the ratio of the total annual facility energy consumption to the energy delivered to the IT devices over the same period. As seen in the section representing the facility infrastructure at the bottom left of the figure, half of the power that enters the facility is transferred into the atmosphere as loss at this stage (PUE = 2). The Uptime Institute 2020 DC Industry Survey states that the mean PUE value of observed data centers dropped from around 2.0 to 1.6 over time.
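The PUE ratio described above can be sketched in a few lines of Python. This is a minimal illustration, not a measurement procedure: the function names `pue` and `infrastructure_share` and the kWh inputs are assumptions made for this example, and a real assessment would follow the metering rules of the published PUE definition.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Return PUE = total facility energy / energy delivered to IT devices.

    Both values are assumed to be metered over the same period (e.g. one year).
    """
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("facility energy cannot be lower than IT energy")
    return total_facility_kwh / it_equipment_kwh


def infrastructure_share(pue_value: float) -> float:
    """Return the fraction of facility energy that never reaches the IT devices."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 - 1.0 / pue_value


if __name__ == "__main__":
    # Hypothetical annual meter readings, chosen only to reproduce PUE = 2.
    print(pue(total_facility_kwh=2_000_000, it_equipment_kwh=1_000_000))
    for value in (2.0, 1.6):
        share = infrastructure_share(value)
        print(f"PUE {value:.1f}: {share:.1%} of facility energy is infrastructure overhead")
```

With PUE = 2 the overhead share is one half, which matches the figure. With the survey's mean of 1.6 it falls to 37.5%.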

The definition and criteria of PUE were published by the Green Grid Consortium in 2006. The metric was later extended to cover the utility consumption of alternative energy and cooling systems beyond electrical power, such as water and other utility categories.

For physical reasons, almost all the power consumed by IT hardware in data center white spaces ends up as a heat load in the room. The critical cooling system has to operate without interruption to transfer this heat load to the atmosphere, and this cooling system itself causes significant consumption.

The center and right of the figure above show the power consumption distribution of an IT hardware item. Around 40% of the power supplied to the hardware is converted into heat in the support modules, in particular the power modules, drivers, and cooling fans. The remaining 60% is consumed in the silicon cores, i.e. the microprocessors. Considering that only signal power carrying information flows out through the network connection, at micro-Watt levels compared to the inflow of electrical power, it is easy to understand that IT hardware is “100%” inefficient. All the power supplied to the IT hardware is converted into heat and directly impacts the critical cooling consumption.
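To make the chain of losses concrete, the short Python sketch below follows a given facility input through the stages described in the text. It assumes the illustrative values from the figure (PUE = 2 and a 40% share for support modules). The function name `power_breakdown` and its parameters are hypothetical, and real facilities and servers will deviate from these round numbers.

```python
def power_breakdown(facility_w: float,
                    pue_value: float = 2.0,
                    support_share: float = 0.40) -> dict:
    """Split facility input power into the three stages shown in the figure.

    pue_value and support_share default to the illustrative values from the
    text; they are assumptions, not measurements.
    """
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    if not 0.0 <= support_share <= 1.0:
        raise ValueError("support_share must be between 0 and 1")

    it_w = facility_w / pue_value       # power that reaches the IT hardware
    support_w = it_w * support_share    # power modules, drivers, fans
    silicon_w = it_w - support_w        # silicon cores / microprocessors
    return {
        "infrastructure_w": facility_w - it_w,
        "support_modules_w": support_w,
        "silicon_w": silicon_w,
    }


if __name__ == "__main__":
    # Hypothetical 1000 W drawn at the facility level.
    for stage, watts in power_breakdown(1000.0).items():
        print(f"{stage}: {watts:.0f} W")
```

Under these assumptions, only about 30% of the power entering the facility reaches the silicon, and all of it still leaves the room as heat.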

If IT hardware were designed efficiently, high-capacity data centers would be possible without high consumption in critical cooling. The main reason for high critical cooling consumption is IT hardware that consumes power continuously and operates like an electrical heater.

IT hardware and operating software providers have focused significantly on hardware efficiency in recent years. They have introduced software techniques such as virtualization, structural changes such as “Blade” servers, and improvements such as advanced fan control. Direct liquid cooling and immersion cooling methods are available to eliminate at least one transfer of heat between media. SPEC (Standard Performance Evaluation Corporation) sets out a standard performance measurement method for servers, SERT (Server Efficiency Rating Tool), and provides information to consumers about the performance of well-known models of named brands based on practical measurements. In parallel, ASHRAE (American Society of Heating, Refrigerating and Air-Conditioning Engineers) TC 9.9 offers efficient methods and standards for the facility to optimize the service product lifecycle and critical cooling consumption.

There is obviously much to be done, particularly regarding the efficiency of IT hardware. Humans describe every unfamiliar object in terms of something they already know, and by this metaphor the human brain is regarded as a computer. Although it is like comparing apples and pears, the power that a 1.4 kg brain consumes for reasoning is known to be about 20 Watts. A standard 2U server, which weighs 10 times as much, can have 800 W or 1600 W power modules. Thousands of such hardware items are needed to reach the capacity of the brain. Popular articles have claimed that the brain is equivalent to 1 Gigawatt of data center capacity.

To function healthily, the human brain does not require cooling; on the contrary, it has to be heated by the human body to remain at around 36 °C. Keeping the inlet air temperature of servers at around 18-27 °C, however, requires critical cooling systems. IT hardware should not be expected to perform like a brain and consume very little energy, but it is apparent that there is a large development gap to close.